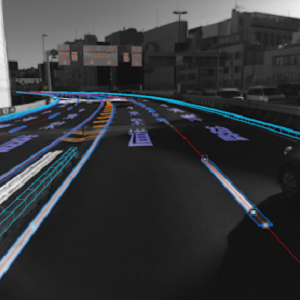

We have collected a vision benchmark suite for detecting static and dynamic objects in the surroundings of our vehicle. The dataset covers diverse Asian traffic scenarios, recorded from peak to low-traffic hours.

The dataset is intended for object detection and recognition in the environment perception of self-driving vehicles. It is built from video sequences and 3D point-cloud data (1,100 km) recorded in more than 80 cities, covering dense-traffic roads, urban and rural areas, highways, hilly areas, and motorways.

We have annotated 20,000 images with approximately 55,000 labels, provided in two formats: YOLO (.txt) and PASCAL VOC (.xml). The dataset covers diverse vehicle classes, including 2-Wheeler (Motorbikes), 3-Wheeler (Rickshaws), and 4+ Wheeler (Cars, Buses, and Trucks).
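
Since the labels come in both formats, it can help to see how they relate. The sketch below converts one YOLO label line into a pixel-coordinate box of the kind stored in a PASCAL VOC `<bndbox>` element. It assumes the common YOLO layout (`class_id x_center y_center width height`, all normalized to the range 0 to 1). The function name, the `PixelBox` type, and the example image size are illustrative and are not part of this dataset; the mapping from class IDs to class names is not shown because it depends on the dataset's own class list.

```python
from typing import NamedTuple


class PixelBox(NamedTuple):
    """Axis-aligned box in pixel coordinates (hypothetical helper type)."""
    class_id: int
    xmin: int
    ymin: int
    xmax: int
    ymax: int


def yolo_line_to_pixel_box(line: str, img_w: int, img_h: int) -> PixelBox:
    """Convert one YOLO label line to pixel corner coordinates.

    Assumes the line is 'class_id x_center y_center width height'
    with the four box values normalized by the image size.
    """
    parts = line.split()
    if len(parts) < 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")

    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:5])

    # Scale the normalized center/size to pixels and derive the corners.
    xmin = round((xc - w / 2) * img_w)
    ymin = round((yc - h / 2) * img_h)
    xmax = round((xc + w / 2) * img_w)
    ymax = round((yc + h / 2) * img_h)

    # Clamp to the image bounds in case of small rounding overshoot.
    xmin, xmax = max(0, xmin), min(img_w, xmax)
    ymin, ymax = max(0, ymin), min(img_h, ymax)

    return PixelBox(class_id, xmin, ymin, xmax, ymax)


if __name__ == "__main__":
    # Example label on a hypothetical 640x480 image.
    print(yolo_line_to_pixel_box("0 0.5 0.5 0.25 0.5", 640, 480))
```

The image width and height must be supplied separately because YOLO label files do not store them; in a VOC file they are available in the `<size>` element.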